Advertisement

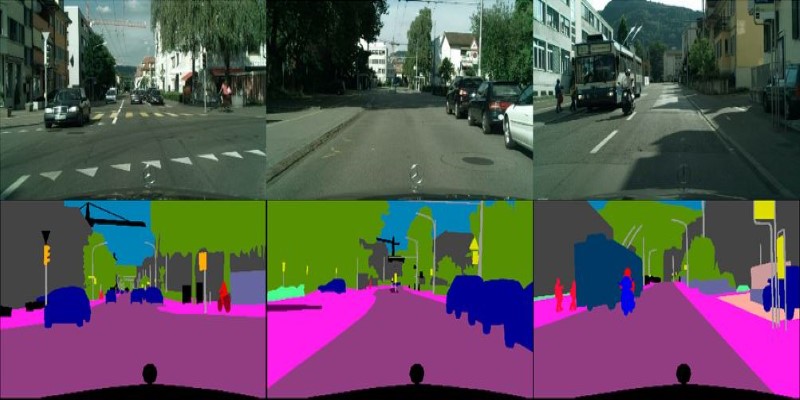

Image segmentation sounds technical, but it’s just the task of breaking an image into regions that mean something. Think of it as coloring different parts of an image so a computer can recognize them—like distinguishing a cat from a couch in a photo. The need for machines to do this accurately is growing fast in areas like medical imaging, satellite photo analysis, and automated inspection. UNet, a deep learning model developed specifically for this task, has become a standout. Its design may look complex at first, but once broken down, it’s surprisingly straightforward and practical.

UNet was introduced in 2015 by Olaf Ronneberger and his colleagues for biomedical image segmentation. The goal was to create a model that could work well even with a limited number of annotated images. That alone sets it apart, as many deep learning models rely on massive labeled datasets to perform well.

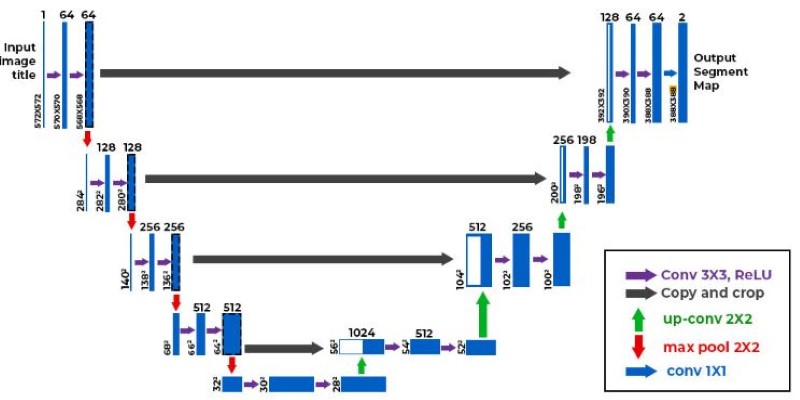

The architecture of UNet follows a symmetrical shape—often described as a “U” because of how the data flows through it. The left half is a downsampling path (called the encoder), which reduces the image size and extracts high-level features. This is done using repeated combinations of convolutional layers and pooling operations. The right half is an upsampling path (decoder), which reconstructs the image back to its original size while making pixel-level predictions about which part belongs to which object.

What makes UNet unique is the skip connections that link the encoder and decoder at each level. These connections bring in high-resolution features lost during downsampling, helping the model retain important spatial information. This is especially useful when every pixel matters, such as outlining the boundaries of a tumor or identifying roads from satellite images.

Another reason UNet works so well for segmentation is its full convolutional nature—meaning it can handle inputs of various sizes without requiring a fixed input shape. That flexibility adds to its practicality, especially when working with real-world data.

At a technical level, image segmentation is about classification—but not just of entire images. Each pixel gets its label. This is much harder than just recognizing that a picture contains a car; the model must identify which specific pixels are part of that car and which are not.

UNet handles this through a combination of encoding the "what" and decoding the "where." The encoder processes the image through several layers to understand complex features, including shapes, edges, and patterns. As the image moves through these layers, it becomes smaller but richer in meaning. The decoder then gradually upsamples this compact data back to the original image dimensions while using the skip connections to preserve detail.

Let’s say you have an image of cells. You want to isolate each one for analysis. Traditional object detection models might just put a box around them. But in medical research, that’s not helpful—you need precise boundaries. UNet excels here because it works at the pixel level and retains both context and detail.

Training UNet typically involves a loss function that compares the predicted mask to the actual labeled mask. Common choices include cross-entropy loss or Dice coefficient loss, which measures the overlap between predicted and actual regions. This helps the model learn how to draw more accurate boundaries as training progresses.

It’s also worth noting that UNet can be used with data augmentation—rotating, flipping, or scaling images during training—to overcome small dataset limitations. This feature was a major reason for its success in medical tasks, where large labeled datasets are rare.

UNet has been adopted in a wide range of fields beyond its medical roots. In agriculture, it helps identify crop boundaries from aerial imagery. In autonomous driving, it can separate lanes, pedestrians, and road signs from background clutter. In industrial settings, it’s used to detect defects on production lines. The consistent factor across all these uses is the need for pixel-level precision.

UNet models are often implemented using frameworks like TensorFlow or PyTorch, and open-source versions are widely available. This has helped speed up experimentation and deployment, especially in research and prototyping environments. Despite the technical depth involved, UNet remains approachable, especially for those familiar with convolutional neural networks.

With tools like UNet, learning image segmentation becomes less about memorizing theory and more about understanding how each part of the model contributes to the final output. Once you understand the interplay between the encoder, decoder, and skip connections, it becomes easier to tune the model for specific tasks. Learning image segmentation also benefits from visual feedback. Unlike classification, where results are just numbers, segmentation outputs can be overlaid on the original image, making it easier to spot where the model performs well or needs improvement. This visual nature makes it one of the more intuitive deep-learning tasks to troubleshoot and refine.

But using UNet isn’t without its challenges. While the original version is quite lightweight compared to other deep learning models, its accuracy can be further improved by modifications. Variants like UNet++ and Attention UNet build on the original by adding extra layers or attention mechanisms to refine predictions. These tweaks often lead to better results but require more computational resources and longer training times.

Another practical challenge is annotation. Image segmentation needs pixel-wise labels, which are time-consuming and costly to produce. Unlike classification tasks, where one label per image is enough, segmentation needs every pixel to be marked. Some newer techniques, such as weakly supervised or semi-supervised learning, aim to reduce the burden of labeling; however, these are still maturing and often come with trade-offs in performance or reliability.

UNet has made image segmentation accessible and practical by combining precision with a simple yet effective design. Its use of skip connections and full convolutional structure allows for detailed pixel-level labeling, even with limited data. While challenges like labeling effort and compute needs exist, UNet remains one of the most effective tools for learning image segmentation, especially in fields where visual accuracy directly supports research, analysis, or decision-making.

Advertisement

Automation Anywhere uses AI to enhance process discovery, enabling faster insights, lower costs, and scalable transformation

What if your AI could actually get work done? Hugging Face’s Transformer Agent combines models and tools to handle real tasks—file, image, code, and more

Find why authors are demanding fair pay from AI vendors who are using their work without proper consent or compensation.

AI groups tune large language models with testing, alignment, and ethical reviews to ensure safe, accurate, and global deployment

How Vision Transformers (ViT) are reshaping computer vision by moving beyond traditional CNNs. Learn how this transformer-based model works, its benefits, and why it’s becoming essential in image processing

Want to save time processing forms? Discover how Azure Form Recognizer extracts structured data from documents with speed, accuracy, and minimal setup

Explore how AI innovates the business world and what the future of AI Transformation holds for the modern business world

How Google Bard’s latest advancements significantly improve its logic and reasoning abilities, making it smarter and more effective in handling complex conversations and tasks

Discover how Dremio uses generative AI tools to simplify complex data queries and deliver faster, smarter data insights.

Google Cloud’s new AI tools enhance productivity, automate processes, and empower all business users across various industries

Lensa AI’s viral portraits raise concerns over user privacy, data consent, digital identity, representation, and ethical AI usage

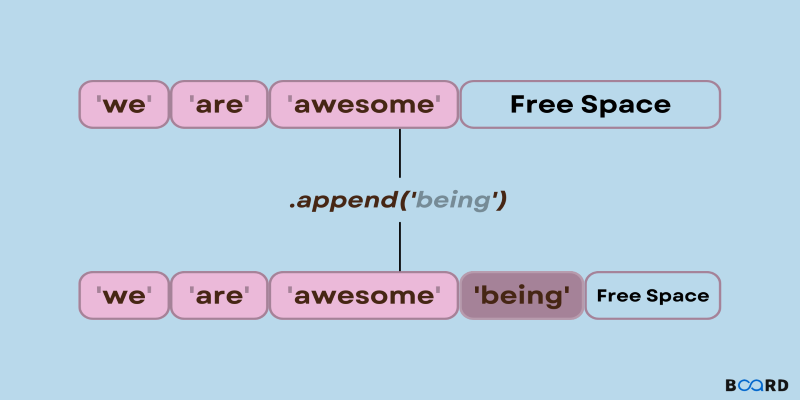

Need to add items to a Python list? Learn how append() works, what it does under the hood, and when to use it with confidence