For years, machines learned to see using convolutional neural networks—layered systems that focused on small image regions to build understanding piece by piece. But what if a model could take in the entire image at once, seeing how every part relates to the whole right from the start? That’s the idea behind Vision Transformers (ViTs).

Borrowing the transformer concept from language models, ViTs process images as sequences of patches, not as pixel grids. This change in perspective is reshaping how visual data is handled, offering new possibilities in accuracy, flexibility, and how models learn to “see” the world.

Vision Transformers begin by breaking an image into fixed-size patches—much like slicing a photo into small squares. Each patch is flattened into a 1D vector and passed through a linear layer to form a patch embedding. These are combined with positional encodings, which help the model understand the position of each patch within the original image.
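The patch-embedding step can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the 224x224 input, 16-pixel patches, and 768-dimensional embeddings are assumed values, the `patchify` helper is a hypothetical name, and the projection and positional weights are random stand-ins for parameters a real model would learn.

```python
import numpy as np

def patchify(image, patch_size):
    """Split an (H, W, C) image into flattened, non-overlapping patches."""
    h, w, c = image.shape
    assert h % patch_size == 0 and w % patch_size == 0, "image must tile evenly"
    rows, cols = h // patch_size, w // patch_size
    # (rows, P, cols, P, C) -> (rows, cols, P, P, C) -> (rows * cols, P * P * C)
    patches = image.reshape(rows, patch_size, cols, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)
    return patches.reshape(rows * cols, patch_size * patch_size * c)

rng = np.random.default_rng(0)
image = rng.random((224, 224, 3))       # stand-in for a real RGB image
patch_size, embed_dim = 16, 768         # assumed, illustrative sizes

patches = patchify(image, patch_size)   # one row per patch

# Random stand-ins for the learned linear projection and positional encodings.
w_proj = rng.normal(0, 0.02, (patches.shape[1], embed_dim))
pos_embed = rng.normal(0, 0.02, (patches.shape[0], embed_dim))

tokens = patches @ w_proj + pos_embed   # patch embeddings plus position
print(patches.shape, tokens.shape)      # (196, 768) (196, 768)
```

With these sizes the image becomes a sequence of 196 tokens (a 14x14 grid of patches), which is what the encoder consumes.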

This sequence of patch embeddings is then fed into a transformer encoder, similar to those used in language models. The encoder uses self-attention layers, allowing each patch to relate to every other patch directly. This ability to handle global information from the start is a major shift from CNNs, which need several layers to achieve something similar.
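To make "each patch relates to every other patch" concrete, here is a rough single-head self-attention sketch in NumPy. It assumes 196 tokens of width 64 and uses random matrices in place of learned query, key, and value weights; a real encoder would add multiple heads, residual connections, layer normalization, and an MLP block.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract the max for stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over (N, D) tokens."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])   # (N, N): one score per patch pair
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
n_tokens, dim = 196, 64                       # assumed, illustrative sizes
tokens = rng.normal(size=(n_tokens, dim))

# Random stand-ins for the learned projection matrices.
w_q, w_k, w_v = (rng.normal(0, dim ** -0.5, (dim, dim)) for _ in range(3))

out, weights = self_attention(tokens, w_q, w_k, w_v)
print(out.shape, weights.shape)               # (196, 64) (196, 196)
```

Row `i` of `weights` shows how much patch `i` draws from every other patch, including ones on the far side of the image, within a single layer.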

A class token is added at the beginning of the sequence. After the transformer layers process everything, the output from this token is used to make predictions. This token gathers information from the entire image, making it suitable for tasks like classification.
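The bookkeeping around the class token might look like the sketch below. The sizes are assumed, and `encoder_stand_in` is a hypothetical placeholder that only mixes tokens with the sequence mean; it is not real attention and exists purely so the shapes can be followed end to end.

```python
import numpy as np

rng = np.random.default_rng(0)
n_patches, dim, n_classes = 196, 64, 10     # assumed, illustrative sizes

patch_tokens = rng.normal(size=(n_patches, dim))
cls_token = np.zeros((1, dim))              # a learned parameter in a real model

# Prepend the class token: the sequence grows from N to N + 1 tokens.
sequence = np.concatenate([cls_token, patch_tokens], axis=0)

def encoder_stand_in(x):
    """Placeholder for the transformer encoder (NOT real attention):
    blends each token with the sequence mean and keeps the (N + 1, D) shape."""
    return 0.5 * x + 0.5 * x.mean(axis=0, keepdims=True)

encoded = encoder_stand_in(sequence)
cls_output = encoded[0]                     # only position 0 feeds the classifier

w_head = rng.normal(0, 0.02, (dim, n_classes))   # stand-in for a learned head
logits = cls_output @ w_head
print(sequence.shape, logits.shape)         # (197, 64) (10,)
```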

ViTs don't build in locality and spatial hierarchy the way CNNs do, which means they make fewer assumptions about the structure of images. That flexibility is particularly useful in tasks where global relationships matter more than local features.

One strength of Vision Transformers is how they handle long-distance relationships in an image. While CNNs build this understanding gradually as their receptive field grows layer by layer, a ViT can relate any two patches within a single self-attention layer. This gives them an edge in situations where the layout or overall composition matters.

ViTs also make it easier to apply the same model to different types of data. Since the architecture isn’t tailored specifically to images, it adapts well to other formats, including combinations of text and visuals. This is especially useful in models designed for multi-modal tasks, where consistency across inputs matters.

But there are trade-offs. ViTs need a lot more data to perform well when trained from scratch. CNNs generalize better from smaller datasets because of their built-in assumptions about image structure. ViTs, being more general-purpose, depend heavily on pretraining with large datasets like ImageNet-21k or JFT-300M.

They also use more computational resources. Self-attention scores every pair of patches, so its cost grows quadratically with the number of patches, which gets expensive quickly for high-resolution images. As a result, training tends to be slower and more memory-heavy than for comparable CNNs.
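A quick back-of-the-envelope calculation shows how fast the number of patch pairs grows with resolution. The helper name `attention_pairs` and the 16-pixel patch size are assumptions for illustration; the count is per attention head and per layer, and ignores the class token.

```python
def attention_pairs(image_size, patch_size):
    """Return (number of patches, number of patch pairs scored by attention)."""
    patches_per_side = image_size // patch_size
    n = patches_per_side ** 2
    return n, n * n

for size in (224, 448, 896):
    n, pairs = attention_pairs(size, patch_size=16)
    print(f"{size}x{size}: {n} patches, {pairs:,} pairs")

# 224x224: 196 patches, 38,416 pairs
# 448x448: 784 patches, 614,656 pairs
# 896x896: 3136 patches, 9,834,496 pairs
```

Doubling the side length of the image quadruples the number of patches and multiplies the number of scored pairs by 16.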

To balance this, hybrid models have been developed. These use CNNs for early layers to capture low-level patterns, followed by transformer layers for global understanding. This approach reduces training costs while keeping many of the benefits of self-attention.

Vision Transformers began with classification tasks, where they performed surprisingly well—especially when trained on large datasets. They've since moved into more complex areas like object detection and segmentation.

In object detection, models like DETR (DEtection TRansformer) streamline the process. Traditional detectors rely on anchor boxes and region proposals, which involve multiple stages. DETR pairs a CNN backbone with a transformer encoder-decoder that predicts the set of boxes directly, removing hand-designed steps such as anchor generation and non-maximum suppression.

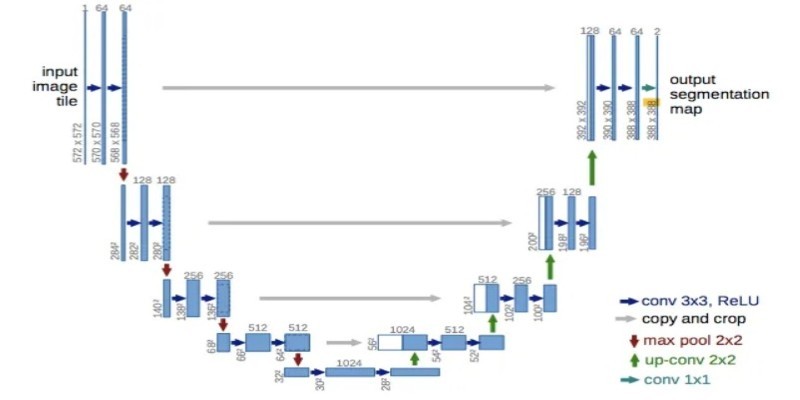

For segmentation tasks, ViTs are used in models such as Segmenter and SETR. These models take advantage of the transformer's ability to combine local details with global layout, which helps them separate objects in an image.

ViTs are also being used in medical imaging, where fine-grained detail across wide areas is critical. They show promise in detecting patterns in MRI scans, X-rays, and pathology slides. In video analysis, time is treated as a third dimension alongside spatial information, making transformers useful for understanding motion and sequences.

Several ViT variants have emerged to improve efficiency. Swin Transformer, for example, limits self-attention to local windows, which reduces computation while keeping useful context. Other versions use hierarchical structures or different patch sizes to better handle various tasks.
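The saving from windowed attention can be illustrated by partitioning a token grid into non-overlapping windows and counting pairs. This is a simplified sketch: the 56x56 grid and 7x7 windows are assumed sizes, `window_partition` is a hypothetical helper, and the shifting of windows between layers that Swin uses to pass information across window borders is left out.

```python
import numpy as np

def window_partition(tokens, window):
    """Group an (H, W, D) grid of tokens into non-overlapping windows.
    Returns an array of shape (num_windows, window * window, D)."""
    h, w, d = tokens.shape
    assert h % window == 0 and w % window == 0, "grid must tile evenly"
    x = tokens.reshape(h // window, window, w // window, window, d)
    x = x.transpose(0, 2, 1, 3, 4)
    return x.reshape(-1, window * window, d)

# A 56x56 grid of 1-dimensional dummy tokens (assumed sizes).
grid = np.arange(56 * 56, dtype=float).reshape(56, 56, 1)
windows = window_partition(grid, window=7)

n = 56 * 56
global_pairs = n * n                                      # every token vs. every token
window_pairs = windows.shape[0] * windows.shape[1] ** 2   # pairs inside windows only
print(windows.shape, global_pairs, window_pairs)
# (64, 49, 1) 9834496 153664
```

With these sizes, restricting attention to windows scores 64 times fewer pairs than global attention over the same grid.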

These adaptations help tailor Vision Transformers to real-world applications, where efficiency and accuracy must coexist.

Vision Transformers are part of a larger shift in AI toward general-purpose models that rely more on data and less on hand-tuned design. Their ability to work across different domains and handle global structures from the start makes them a strong alternative to CNNs.

As pretrained ViTs become more widely available, developers can fine-tune or reuse them without huge computational resources. This helps expand their use beyond large research labs and into more practical applications. The line between language and vision models is also starting to blur. Unified models that handle both types of input, like CLIP and Flamingo, are becoming more common.

There’s still room to improve. Making ViTs more data-efficient, easier to interpret, and less dependent on massive pretraining remains a focus. But their progress so far suggests they’re not a passing trend. They’re changing how visual tasks are approached—and opening up new ways to think about image processing altogether.

Vision Transformers represent a turning point in how machines process images. Instead of relying on built-in locality and stacked local operations, they take a broader view from the start. Their use of self-attention captures image-wide relationships directly, which in turn changes what is possible in visual tasks. While they require more data and computation up front, their performance across tasks and their flexibility make them worth the investment. As research continues, ViTs are likely to become even more central in computer vision, with more efficient models and broader use in fields that rely on visual understanding.
