When it comes to AI models, Hugging Face is rarely just another name in the room; it tends to ship quietly clever work. Transformer Agent is one example. It arrived with no dramatic announcements and no loud claims, just a practical tool that could change how you use large language models. So, if you’ve been wondering what’s under the hood and whether it’s worth your attention, here’s a closer look that sticks to what matters.

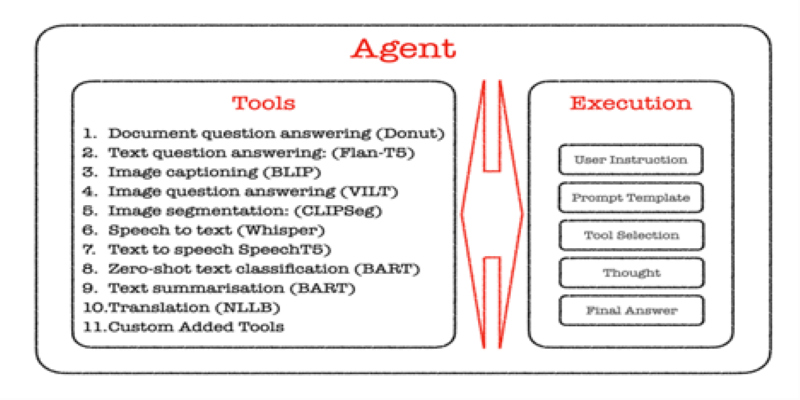

Let's start with the basics. Transformer Agent isn't a model itself—it's more like a system built on top of models. Think of it as a reliable coordinator who knows how to ask the right questions, fetch the right tools, and use those tools to get a task done. It doesn't rely on one single model or technique. Instead, it combines multiple pre-built tools, lets a language model figure out which ones to use, and executes complex tasks step by step.

This isn’t about doing more of the same. It’s about getting specific work done—image analysis, file processing, Python code execution, and more—by breaking it down into pieces and calling the right function at the right time.

It’s like handing over a full toolbox to a capable assistant who not only knows what each tool does but knows when and how to use each one. The result? Tasks that would normally need you to hop between platforms, write custom code, or do repetitive searches can now be handled in a single flow.

Here’s where it gets interesting. Most language models are trained to predict the next token. Hugging Face took that ability and gave it an actual job. Transformer Agent uses a language model (for example OpenAssistant, StarCoder, or an OpenAI model) and wraps it in a mechanism that lets it call tools such as image classifiers, code interpreters, or document readers.

It reads your input, decides which tool fits best, and then executes that tool through what Hugging Face calls “tools-as-functions.” Each tool is registered with a description and function, which the model reads like a manual. But instead of just reading the manual, it actually runs the functions.
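To make the registration idea concrete, here is a minimal sketch in plain Python. It is not Hugging Face’s implementation: the `Tool` class, the `register` decorator, and the two sample tools are hypothetical names chosen for illustration. It only shows the general pattern of pairing each function with a description that can be placed in the model’s prompt.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Tool:
    """A callable plus the plain-language description the model gets to read."""
    name: str
    description: str
    func: Callable[..., object]


# Hypothetical registry; the names and tools here are illustrative only.
REGISTRY: Dict[str, Tool] = {}


def register(name: str, description: str):
    """Decorator that stores a function in the registry under `name`."""
    def wrap(func):
        REGISTRY[name] = Tool(name, description, func)
        return func
    return wrap


@register("word_count", "Counts the words in a piece of text.")
def word_count(text: str) -> int:
    return len(text.split())


@register("add", "Adds two numbers and returns the sum.")
def add(a: float, b: float) -> float:
    return a + b


def describe_tools() -> str:
    """Render the 'manual' that would be placed in the model's prompt."""
    return "\n".join(f"- {t.name}: {t.description}" for t in REGISTRY.values())


def call_tool(name: str, *args):
    """Run the tool the model selected, failing loudly on unknown names."""
    if name not in REGISTRY:
        raise KeyError(f"unknown tool: {name}")
    return REGISTRY[name].func(*args)
```

Calling `describe_tools()` produces the text a model would read, and `call_tool("add", 2, 3)` runs the function it picked. Keeping the description next to the function means the manual and the behavior are less likely to drift apart.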

Let’s say you upload a PDF and ask the agent to extract data and run calculations on it. Instead of you writing a script or copying things manually, the agent picks the document loader, extracts the content, uses the math tool, and gives you back the numbers—all within the same response loop. It’s not reinventing the model—it’s giving it arms and legs.

Hugging Face didn’t just build the agent and call it a day. They shipped it with a suite of ready-to-use tools, each one aimed at a different type of task. The variety here is the real strength.

File and Document Tools: These tools make file handling less of a hassle. Whether it’s PDFs, CSVs, or plain text, the agent knows how to read, interpret, and process them. If you often deal with documents or structured data, this cuts out several steps from your workflow.

Image and Vision Tools: Got an image you want to analyze? There’s no need to upload it to a separate vision model. Transformer Agent can run image classification, caption generation, or even object detection right there in the flow.

Code Tools: The built-in code execution feature isn’t a gimmick—it actually works. You can ask for a custom Python script, run it, and see the result without leaving the interface. It can run calculations, plot charts, and more. No need to copy code to a separate IDE.

Search and API Wrappers: Need up-to-date info? The agent can use search tools to bring in web results and feed them back into its chain of thought. It can also use APIs as needed. This gives it a way to step outside of static model training and fetch real-time answers.
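Of the four categories above, the code tool is the easiest to sketch. The snippet below is an assumption about how such a tool could work, not a description of the agent’s internals: the hypothetical `run_python` helper executes a generated snippet and hands back whatever it printed, so the output can be fed into the next step.

```python
import contextlib
import io


def run_python(source: str) -> str:
    """Execute a snippet and return whatever it printed.

    Illustration only: exec() offers no sandboxing, so a real deployment
    would need isolation (a separate process, container, or restricted
    interpreter) before running model-generated code.
    """
    buffer = io.StringIO()
    namespace = {}  # fresh globals so snippets do not leak state into each other
    try:
        with contextlib.redirect_stdout(buffer):
            exec(source, namespace)
    except Exception as exc:  # report the failure back instead of crashing
        return f"{buffer.getvalue()}error: {type(exc).__name__}: {exc}"
    return buffer.getvalue()


# Stand-in for code a model might generate for "add the numbers 1 to 10".
generated = "total = sum(range(1, 11))\nprint(total)"
result = run_python(generated)
```

Returning errors as text instead of raising them is a deliberate choice in this sketch: it gives the model a chance to read the failure and try again.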

Together, these tools form a loop: read input → reason → fetch data or run a task → generate output. The whole process feels natural, almost like asking a knowledgeable assistant who happens to have coding skills, internet access, and image recognition built in.
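The loop can be sketched in a few lines. Everything here is hypothetical: the `plan` function stands in for the language model with simple keyword matching so the example runs offline, the two tools are toy versions of a document reader and a math tool, and the sample text is made up.

```python
import re
from typing import Callable, Dict, List, Tuple


# Hypothetical tools; each takes the previous step's output as its input.
def extract_numbers(text: str) -> List[float]:
    """Pull every integer or decimal out of a block of text."""
    return [float(m) for m in re.findall(r"-?\d+(?:\.\d+)?", text)]


def total(values: List[float]) -> float:
    """Add up a list of numbers."""
    return sum(values)


TOOLS: Dict[str, Callable] = {"extract_numbers": extract_numbers, "total": total}


def plan(request: str) -> List[str]:
    """Stand-in for the language model: map a request to tool names.

    A real agent would prompt a model with the tool descriptions; keyword
    matching is used here only to keep the sketch self-contained.
    """
    lowered = request.lower()
    if "total" in lowered or "sum" in lowered:
        return ["extract_numbers", "total"]
    if "numbers" in lowered:
        return ["extract_numbers"]
    return []


def run_agent(request: str, document: str) -> Tuple[List[str], object]:
    """Read input, pick tools, run them in order, return the trace and result."""
    steps = plan(request)          # reason about which tools are needed
    value: object = document
    for name in steps:             # run each tool on the previous output
        value = TOOLS[name](value)
    return steps, value            # hand back the trace and the final output


# Made-up sample document for illustration.
doc = "January revenue was 120.5, February revenue was 99.5, and March revenue was 80."
trace, answer = run_agent("What is the total revenue?", doc)
# trace is ["extract_numbers", "total"] and answer is 300.0
```

Returning the trace alongside the answer makes it easy to see which tools ran and in what order, which helps when a multi-step result looks wrong.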

A lot of AI tools today look impressive in demos but feel a bit limited once you start using them. Transformer Agent, on the other hand, feels like it’s built to handle the not-so-glamorous tasks that eat up your time. Here’s how that plays out in real scenarios:

Instead of converting a file, uploading it to a third-party tool, and then parsing the results, you can feed the file directly to the agent. It figures out the structure and gives you clear answers. For people working with research, finance, or policy documents, that removes several manual steps from each task.

You can ask questions about images and get detailed, contextual answers. Want to know how many people are in a photo or whether a medical scan shows certain patterns? The agent knows how to pair image models with language outputs, giving you more than just a label.

Instead of hopping into a Jupyter notebook, you can ask the agent to solve a problem, see the output, and even iterate on it. It’s not meant to replace developers, but it does speed up the early phases of experimentation or data analysis.

Sometimes, you don’t need just one answer—you need a few steps to get there. The agent uses search tools not just to pull in data but to interpret it and connect the dots. So you’re not just getting links—you’re getting answers that already consider what those links say.

Transformer Agent isn’t trying to be flashy. It’s not out to become your new best friend or make sweeping claims about AI’s future. Instead, it’s doing something a bit more grounded: helping you get work done with fewer clicks, fewer tools, and less guesswork. If you’ve used large language models before and wished they could follow through on what they start, Transformer Agent is Hugging Face’s answer to that wish. It’s smart, structured, and—more importantly—it works quietly in the background to make your workflow smoother.

And while it may not come with the hype of some newer releases, it might just end up being the tool you actually reach for when you need results without distractions.

Advertisement

How Netflix Case Study (EDA) reveals the data-driven strategies behind its streaming success, showing how viewer behavior and preferences shape content and user experience

Are you overestimating your Responsible AI maturity? Discover key aspects of AI governance, ethics, and accountability for sustainable success

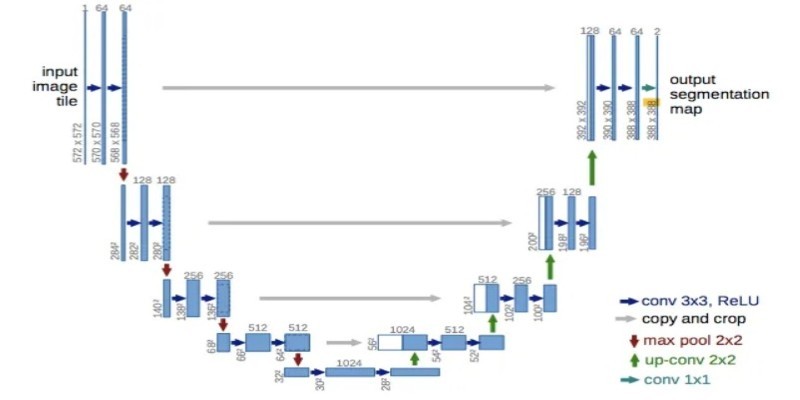

How UNet simplifies complex tasks in image processing. This guide explains UNet architecture and its role in accurate image segmentation using real-world examples

Find how AI is transforming the CPG sector with powerful applications in marketing, supply chain, and product innovation.

Intel’s deepfake detector promises accuracy but sparks ethical debates around privacy, data usage, and surveillance risks

Salesforce advances secure, private generative AI to boost enterprise productivity and data protection

Discover how resume companies are using non-biased generative AI to ensure fair, inclusive, and accurate hiring decisions.

How depth2img pre-trained models improve image-to-image generation by using depth maps to preserve structure and realism in visual transformations

Lensa AI’s viral portraits raise concerns over user privacy, data consent, digital identity, representation, and ethical AI usage

Master GPT-4.1 prompting with this detailed guide. Learn techniques, tips, and FAQs to improve your AI prompts

How to automate data analysis with Langchain using language models, custom tools, and smart chains. Streamline your reporting and insights through efficient Langchain data workflows

How Google Bard’s latest advancements significantly improve its logic and reasoning abilities, making it smarter and more effective in handling complex conversations and tasks