As Artificial Intelligence (AI) continues to change industries, organizations are increasingly prioritizing responsible AI practices to ensure their AI systems are ethical, transparent, and equitable. However, many businesses may be overestimating their AI maturity when it comes to handling these complex challenges. Achieving true Responsible AI maturity is not just about implementing AI technology but also about creating a framework that ensures AI operates responsibly, minimizes risks, and upholds ethical standards.

While many companies feel confident about their AI capabilities, they may overlook or underestimate critical aspects of responsible AI, such as ensuring fairness, mitigating bias, and establishing proper governance and continuous monitoring. This blog post explores the challenges organizations face when building responsible AI systems, the risks of overestimating AI maturity, and practical steps for improving AI maturity within your organization.

Responsible AI maturity refers to an organization's ability to design, deploy, and manage AI systems in a way that aligns with ethical principles and societal values. Achieving this maturity involves more than just technological implementation; it requires careful consideration of how AI models are developed, how data is handled, and the impact of AI on people and society.

There are several key components of Responsible AI maturity:

To achieve Responsible AI maturity, organizations need a structured approach that includes proper governance, regular audits, and continuous monitoring of AI systems to ensure they evolve responsibly over time.

Many organizations believe they have achieved Responsible AI maturity, but several common misunderstandings can lead to overestimations. Let's examine some of the key areas where organizations frequently fall short.

Achieving Responsible AI maturity comes with its own set of challenges. Although many organizations are eager to implement AI, the path to deploying responsible and ethical AI is not always straightforward. Below are some of the key obstacles that companies face when striving to improve their AI maturity.

To avoid overestimating their Responsible AI maturity, organizations must actively work to improve their processes, frameworks, and oversight mechanisms. Here are practical steps to strengthen Responsible AI maturity and ensure a more ethical and accountable AI journey.

Achieving Responsible AI maturity is a journey, not a destination. While many organizations may feel confident about their AI systems, it's easy to overlook the complexities involved in maintaining ethical, transparent, and fair AI practices. Overestimating AI maturity can lead to severe consequences, including biased decisions, privacy violations, and a loss of public trust.

To truly succeed in implementing Responsible AI, organizations must continually assess their processes, prioritize ethical considerations, and ensure they have the necessary governance, resources, and talent in place to support this effort.

Advertisement

Compare Microsoft, Copilot Studio, and custom AI to find the best solution for your business needs.

Want to save time processing forms? Discover how Azure Form Recognizer extracts structured data from documents with speed, accuracy, and minimal setup

How Google Bard’s latest advancements significantly improve its logic and reasoning abilities, making it smarter and more effective in handling complex conversations and tasks

Find how AI is transforming the CPG sector with powerful applications in marketing, supply chain, and product innovation.

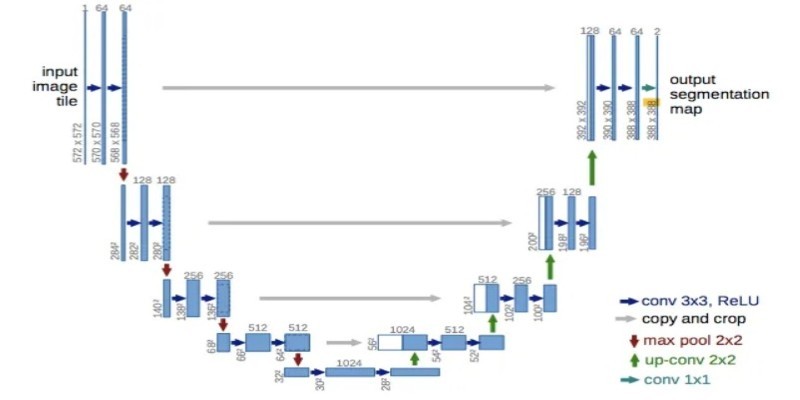

How UNet simplifies complex tasks in image processing. This guide explains UNet architecture and its role in accurate image segmentation using real-world examples

Lensa AI’s viral portraits raise concerns over user privacy, data consent, digital identity, representation, and ethical AI usage

AI groups tune large language models with testing, alignment, and ethical reviews to ensure safe, accurate, and global deployment

How Vision Transformers (ViT) are reshaping computer vision by moving beyond traditional CNNs. Learn how this transformer-based model works, its benefits, and why it’s becoming essential in image processing

Oracle Cloud Infrastructure boosts performance by integrating Nvidia GPUs and AI-powered solutions for smarter workloads

Master GPT-4.1 prompting with this detailed guide. Learn techniques, tips, and FAQs to improve your AI prompts

What if your AI could actually get work done? Hugging Face’s Transformer Agent combines models and tools to handle real tasks—file, image, code, and more

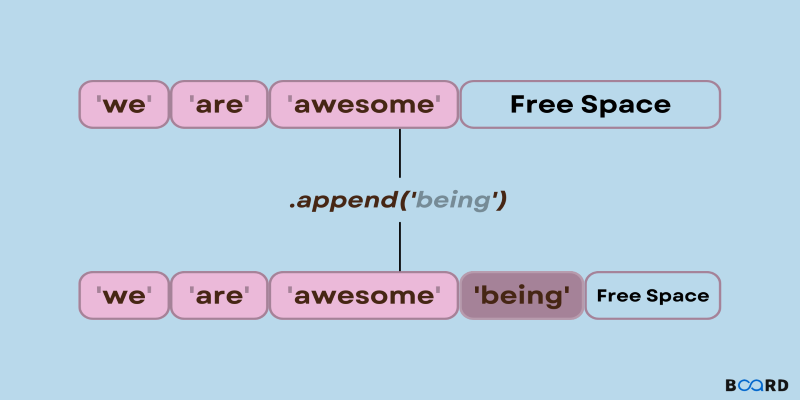

Need to add items to a Python list? Learn how append() works, what it does under the hood, and when to use it with confidence