Data analysis is often a mix of repetitive steps, exploratory thinking, and writing code that doesn’t change much across projects. You might spend hours cleaning up datasets, building summaries, answering common questions, or generating reports. This process can feel like you're reinventing the wheel each time. That’s where Langchain becomes useful.

It acts as a bridge between large language models (LLMs) and your data workflows. Rather than relying entirely on manual code or human-driven insight, Langchain helps you build workflows where an LLM can understand questions, pull the right data, and return answers—automatically. And this goes far beyond simple querying.

Langchain is a framework that enables developers to connect LLMs, such as GPT-4, to various tools, including databases, APIs, file systems, and custom scripts. The core idea is that instead of just asking the model a question in a chat and stopping there, you can build chains of thought and action. These chains combine reasoning with external tools to give more useful answers.

For data analysis, this means a user could ask, "What were the top-selling items last month by region?" and Langchain can take that question, parse the intent, query a SQL database, filter the results, and provide a structured summary without the user writing or pasting any SQL. The value is that natural-language requests get translated into actions. That's different from a chatbot or a simple interface: Langchain lets you plug in logic, memory, data sources, and processing steps that would normally require custom scripting.

There are three core pieces of Langchain that make this work: prompts, tools, and chains. Prompts help shape how the LLM responds, tools give the model external capabilities (like running Python or calling an API), and chains let you link tasks together. For automated data analysis, you can chain together a natural language question, a database call, a computation, and a final summary—without needing someone to press buttons at every step.
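To make the three pieces concrete, here is a minimal plain-Python sketch of the idea, not Langchain's own API: `summary_prompt` plays the role of a prompt template, `total_revenue` is a tool, and the hypothetical `chain` helper feeds each step's output into the next. `fake_llm` is a placeholder that echoes its input so the example runs without a model or API key.

```python
from typing import Callable, List, Tuple


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a model call: it just echoes the last line of the prompt.
    return "Summary: " + prompt.splitlines()[-1]


def summary_prompt(data: str) -> str:
    # Prompt: a template that shapes what the model sees and how it should answer.
    return f"You are a data analyst. Summarize the figures below in one line.\n{data}"


def total_revenue(rows: List[Tuple[str, float]]) -> str:
    # Tool: an ordinary function that gives the model a capability it lacks (exact arithmetic).
    return f"total revenue = {sum(revenue for _, revenue in rows):.2f}"


def chain(*steps: Callable) -> Callable:
    # Chain: run the steps in order, feeding each step's output into the next one.
    def run(value):
        for step in steps:
            value = step(value)
        return value
    return run


pipeline = chain(total_revenue, summary_prompt, fake_llm)
print(pipeline([("north", 120.0), ("south", 80.5)]))
```

In Langchain itself these roles are filled by its prompt, tool, and chain abstractions; the sketch only shows how the pieces fit together.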

To see how this works in practice, let’s say you have sales data in a SQL database and want to automate weekly insights. With Langchain, you can set up a flow that runs every Monday, queries the latest sales figures, analyzes them, and writes up a summary report.

This starts by defining a SQLDatabaseChain in Langchain, which connects your language model to a database. You give the model permission to read from tables, describe your schema, and then let it translate natural language into SQL queries. For example, if you ask, “Which product categories saw the biggest drop in sales last week?”, the chain turns that into a SQL query, runs it, and passes the result back to the model.
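The sketch below mimics what a SQL chain does, under clearly stated assumptions: it uses Python's built-in `sqlite3` with an in-memory table, builds the schema description from `sqlite_master`, and replaces the model with a hypothetical `fake_text_to_sql` function that returns hard-coded SQL. It is not Langchain's `SQLDatabaseChain` (which, in recent releases, ships in the separate `langchain_experimental` package); it only illustrates the question, schema, SQL, result loop.

```python
import sqlite3


def describe_schema(conn: sqlite3.Connection) -> str:
    # Collect the CREATE TABLE statements so the model knows which tables and columns exist.
    rows = conn.execute("SELECT sql FROM sqlite_master WHERE type = 'table'").fetchall()
    return "\n".join(row[0] for row in rows)


def fake_text_to_sql(question: str, schema: str) -> str:
    # Hypothetical stand-in for the LLM: a real chain would send the question and schema
    # to a model and get SQL back. Here the answer is hard-coded so the sketch runs offline.
    return (
        "SELECT category, "
        "SUM(CASE WHEN week = 2 THEN revenue ELSE 0 END) - "
        "SUM(CASE WHEN week = 1 THEN revenue ELSE 0 END) AS delta "
        "FROM sales GROUP BY category ORDER BY delta"
    )


def answer(conn: sqlite3.Connection, question: str):
    schema = describe_schema(conn)
    sql = fake_text_to_sql(question, schema)
    # Minimal guard only: refuse anything that is not a SELECT before running it.
    if not sql.lstrip().upper().startswith("SELECT"):
        raise ValueError(f"refusing to run non-SELECT query: {sql}")
    return conn.execute(sql).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (category TEXT, week INTEGER, revenue REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("toys", 1, 100.0), ("toys", 2, 60.0), ("books", 1, 50.0), ("books", 2, 70.0)],
)
print(answer(conn, "Which product categories saw the biggest drop in sales last week?"))
```

The most negative `delta` sorts first, so the category with the biggest drop leads the result. The SELECT check is deliberately thin; stronger guardrails belong at the database level.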

Now, suppose the model needs to do more—like run a statistical test, format a report, or compare trends. That’s where Python REPL tools or custom tools come in. Langchain allows the LLM to call Python code directly, which means it can do calculations, create visualizations, or generate reports dynamically.
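A custom tool is usually just a well-described function. The two functions below are hypothetical examples of the kind of calculation an LLM could call instead of doing arithmetic in its head: a week-over-week percent change and a rough outlier check based on standard deviations. The `TOOLS` dictionary is an assumption standing in for however your framework registers tools.

```python
from statistics import mean, stdev
from typing import Callable, Dict, List


def pct_change(previous: float, current: float) -> float:
    # Week-over-week change in percent; undefined when the previous value is zero.
    if previous == 0:
        raise ValueError("previous value is zero; percent change is undefined")
    return (current - previous) * 100 / previous


def is_unusual(history: List[float], latest: float, threshold: float = 2.0) -> bool:
    # Flag the latest value if it lies more than `threshold` sample standard
    # deviations from the historical mean (a rough z-score check, not a formal test).
    return abs(latest - mean(history)) > threshold * stdev(history)


# Hypothetical registry: an agent or chain would look tools up by name.
TOOLS: Dict[str, Callable] = {"pct_change": pct_change, "is_unusual": is_unusual}

print(TOOLS["pct_change"](100.0, 60.0))
print(TOOLS["is_unusual"]([100.0, 102.0, 98.0, 101.0, 99.0], 60.0))
```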

You can also introduce agents into your flow. An agent is an LLM that decides which tool to use based on the question. For example, if the request is to summarize customer churn patterns, it may pull raw data, run some code to group by customer type, and then write a paragraph explaining the result. You don't need to hardcode every step; the agent decides them at run time.
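The loop below sketches the agent pattern in plain Python: at each step a decision function picks a tool, the tool runs, and its output is added to a shared state until the agent decides to finish. `fake_decide` is a hypothetical, rule-based stand-in for the LLM's choice, and the churn data is made up for illustration.

```python
from typing import Callable, Dict, List, Tuple


def group_by_customer_type(rows: List[Tuple[str, bool]]) -> Dict[str, float]:
    # Tool: churn rate per customer type.
    counts: Dict[str, Tuple[int, int]] = {}
    for customer_type, churned in rows:
        total, lost = counts.get(customer_type, (0, 0))
        counts[customer_type] = (total + 1, lost + int(churned))
    return {kind: lost / total for kind, (total, lost) in counts.items()}


def write_paragraph(rates: Dict[str, float]) -> str:
    # Tool: turn the numbers into a sentence (a real agent would let the LLM write this).
    worst = max(rates, key=rates.get)
    return f"Churn is highest among {worst} customers ({rates[worst]:.0%})."


TOOLS: Dict[str, Callable] = {"group": group_by_customer_type, "write": write_paragraph}


def fake_decide(state: dict) -> str:
    # Hypothetical stand-in for the LLM's reasoning: choose the next tool from the current state.
    if "rates" not in state:
        return "group"
    if "report" not in state:
        return "write"
    return "finish"


def run_agent(rows: List[Tuple[str, bool]], max_steps: int = 5) -> str:
    state: dict = {"rows": rows}
    for _ in range(max_steps):  # cap the loop so a confused agent cannot run forever
        action = fake_decide(state)
        if action == "finish":
            return state["report"]
        if action == "group":
            state["rates"] = TOOLS["group"](state["rows"])
        elif action == "write":
            state["report"] = TOOLS["write"](state["rates"])
    raise RuntimeError("agent did not finish within max_steps")


rows = [("monthly", True), ("monthly", False), ("annual", False), ("annual", False)]
print(run_agent(rows))
```

The `max_steps` cap is worth keeping with a real model too: an agent that keeps choosing tools without finishing should fail loudly rather than loop.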

The final step might be a report writer chain that takes all this output and formats it for stakeholders. You can use Langchain to write natural-sounding emails, markdown reports, or dashboard posts based on the computed results. This end-to-end pipeline replaces what would normally take hours of manual querying, scripting, and summarizing.
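A report writer can be as simple as a formatting function that turns computed rows into Markdown. In this hypothetical sketch the headline is passed in as a string; in a Langchain pipeline it would typically come from the model, while the figures come straight from the query so the model never has to restate them.

```python
from typing import List, Tuple


def markdown_report(title: str, headline: str, rows: List[Tuple[str, float]]) -> str:
    # Narrative text (headline) and exact figures (table) are kept separate on purpose.
    lines = [f"# {title}", "", headline, "", "| Category | Change |", "| --- | ---: |"]
    for category, change in rows:
        lines.append(f"| {category} | {change:+.1f} |")  # explicit sign for gains and drops
    return "\n".join(lines)


print(markdown_report("Weekly sales", "Toys fell the most week over week.",
                      [("toys", -40.0), ("books", 20.0)]))
```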

Langchain is not magic, and there are some things to think through when using it for data analysis. One issue is that LLMs make mistakes when writing complex queries and can misinterpret ambiguous questions. To reduce this, provide examples, describe your schema well, and use prompt engineering to guide the model. For instance, you can prompt it with: “Only use the columns date, revenue, and region from the sales table.” That makes it less likely to hallucinate columns or misuse data.
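One way to apply this advice is to generate the prompt from a list of allowed columns plus a few worked examples, so the restriction is always stated the same way. The functions and the example question/SQL pair below are hypothetical; the date filter in the example assumes dates are stored as ISO strings.

```python
from typing import List, Sequence, Tuple

# Hypothetical few-shot example; swap in real question/SQL pairs for your own schema.
EXAMPLES: List[Tuple[str, str]] = [
    (
        "What was total revenue by region in March 2024?",
        "SELECT region, SUM(revenue) FROM sales "
        "WHERE date BETWEEN '2024-03-01' AND '2024-03-31' GROUP BY region;",
    ),
]


def join_columns(columns: Sequence[str]) -> str:
    # Produces "date, revenue, and region" style lists for the instruction sentence.
    if len(columns) <= 2:
        return " and ".join(columns)
    return ", ".join(columns[:-1]) + f", and {columns[-1]}"


def build_sql_prompt(question: str, table: str, columns: Sequence[str],
                     examples: Sequence[Tuple[str, str]] = EXAMPLES) -> str:
    # State the schema, restrict the usable columns, then show worked examples.
    parts = [
        f"You write SQLite queries against the table {table}({', '.join(columns)}).",
        f"Only use the columns {join_columns(columns)} from the {table} table.",
        "If the question cannot be answered with these columns, say so instead of guessing.",
        "",
    ]
    for example_question, example_sql in examples:
        parts += [f"Question: {example_question}", f"SQL: {example_sql}", ""]
    parts += [f"Question: {question}", "SQL:"]
    return "\n".join(parts)


print(build_sql_prompt("Which region had the highest revenue last week?",
                       "sales", ["date", "revenue", "region"]))
```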

Another tip is to add validation steps. After the model generates a query or result, add a manual or automated check before it moves forward. For instance, confirm that a SQL query actually returns rows, or that a chart makes sense, before embedding it in a report.
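An automated check can be a thin wrapper around query execution. This sketch assumes a `sqlite3` connection and a caller that knows which columns it expects; it rejects results with the wrong columns or no rows. The `run_validated` name and `ValidationError` class are illustrative, not part of any library.

```python
import sqlite3
from typing import List, Sequence


class ValidationError(Exception):
    """Raised when a generated query's result fails a sanity check."""


def run_validated(conn: sqlite3.Connection, sql: str,
                  expected_columns: Sequence[str]) -> List[tuple]:
    cursor = conn.execute(sql)
    # Check the shape first: the model may have picked different columns than expected.
    columns = [description[0] for description in cursor.description]
    if columns != list(expected_columns):
        raise ValidationError(f"expected columns {list(expected_columns)}, got {columns}")
    rows = cursor.fetchall()
    # An empty result can mean a wrong filter (e.g. a bad date range), not "no sales".
    if not rows:
        raise ValidationError("query returned no rows")
    return rows


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, revenue REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?)", [("north", 10.0), ("south", 5.0)])

print(run_validated(conn,
                    "SELECT region, SUM(revenue) AS total FROM sales GROUP BY region ORDER BY region",
                    ["region", "total"]))
try:
    run_validated(conn, "SELECT region, revenue FROM sales WHERE revenue < 0", ["region", "revenue"])
except ValidationError as err:
    print("blocked:", err)
```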

Security is another factor. When automating access to databases or tools, you need guardrails that restrict what the LLM can do: connect through a read-only database account, and limit which tables it can see (Langchain's SQL database wrapper, for example, can be configured to include only specific tables). Always monitor which queries are being run, especially if end users are feeding in natural language prompts.
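If the data lives in SQLite, Python's `sqlite3` module offers two real guardrails that work independently of Langchain. For a file-backed database you can open the connection read-only with `sqlite3.connect("file:sales.db?mode=ro", uri=True)` (the file name here is hypothetical). The sketch below uses the other one, `Connection.set_authorizer`, to allow only reads from an allow-listed set of tables; the table names are assumptions for the example.

```python
import sqlite3

ALLOWED_TABLES = {"sales"}
ALLOWED_ACTIONS = {sqlite3.SQLITE_SELECT, sqlite3.SQLITE_READ, sqlite3.SQLITE_FUNCTION}


def read_only_authorizer(action, arg1, arg2, db_name, trigger_or_view):
    # SQLite calls this while compiling each statement. Anything that is not a
    # plain read (INSERT, DELETE, DROP, BEGIN, ATTACH, ...) is denied outright.
    if action not in ALLOWED_ACTIONS:
        return sqlite3.SQLITE_DENY
    # For SQLITE_READ, arg1 is the table name and arg2 the column name.
    if action == sqlite3.SQLITE_READ and arg1 not in ALLOWED_TABLES:
        return sqlite3.SQLITE_DENY
    return sqlite3.SQLITE_OK


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, revenue REAL)")
conn.execute("CREATE TABLE customers (name TEXT, email TEXT)")
conn.executemany("INSERT INTO sales VALUES (?, ?)", [("north", 10.0), ("south", 5.0)])
conn.commit()
conn.set_authorizer(read_only_authorizer)  # install the guard after setup is done

print(conn.execute("SELECT region, SUM(revenue) FROM sales GROUP BY region ORDER BY region").fetchall())
for blocked in ("DELETE FROM sales", "SELECT email FROM customers"):
    try:
        conn.execute(blocked)
    except sqlite3.DatabaseError as err:
        print("blocked:", blocked, "->", err)
```

Denied statements fail at compile time with `sqlite3.DatabaseError`, so nothing a generated query asks for outside the allow-list ever runs.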

Scalability is worth thinking about, too. You may start with one agent doing one task. But as workflows grow, Langchain lets you combine multiple chains, introduce memory (for ongoing sessions), and build multi-step reasoning paths. You can store past reports, let the model compare weeks, and introduce context into future reports.
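Memory does not have to be elaborate. The hypothetical `ReportMemory` class below keeps past weekly totals in a list, computes the change between the two most recent weeks, and renders recent history as text that could be prepended to the next prompt. A real deployment would persist this somewhere durable; the week labels and figures are made up.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class ReportMemory:
    # Hypothetical in-process store; a real deployment would persist this (file, DB, ...).
    history: List[Tuple[str, Dict[str, float]]] = field(default_factory=list)

    def save(self, week: str, totals: Dict[str, float]) -> None:
        self.history.append((week, totals))

    def compare_latest(self) -> Dict[str, float]:
        # Change per key between the two most recent reports (empty until two exist).
        if len(self.history) < 2:
            return {}
        (_, previous), (_, latest) = self.history[-2], self.history[-1]
        return {key: latest[key] - previous.get(key, 0.0) for key in latest}

    def context(self, last_n: int = 2) -> str:
        # Text block that could be prepended to the next prompt as "memory".
        return "\n".join(f"{week}: {totals}" for week, totals in self.history[-last_n:])


memory = ReportMemory()
memory.save("2024-W01", {"north": 100.0, "south": 40.0})
memory.save("2024-W02", {"north": 80.0, "south": 55.0})
print(memory.compare_latest())
print(memory.context())
```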

The real strength of using Langchain in data analysis is the balance between control and flexibility. You're not writing every step by hand. But you're not handing over full control to an unpredictable model, either. You build a framework where LLMs act like data assistants—smart enough to handle the routine work and guided enough not to go off track.

Langchain makes data analysis more efficient by automating routine steps like querying, summarizing, and reporting. It connects language models with tools and data sources in a controlled and repeatable manner. This reduces manual work and helps analysts focus on interpretation, not repetitive tasks. With the right setup and guardrails, Langchain can turn natural language questions into structured insights—making your data workflows faster, smarter, and easier to manage over time.

Advertisement

Intel’s deepfake detector promises accuracy but sparks ethical debates around privacy, data usage, and surveillance risks

Compare Microsoft, Copilot Studio, and custom AI to find the best solution for your business needs.

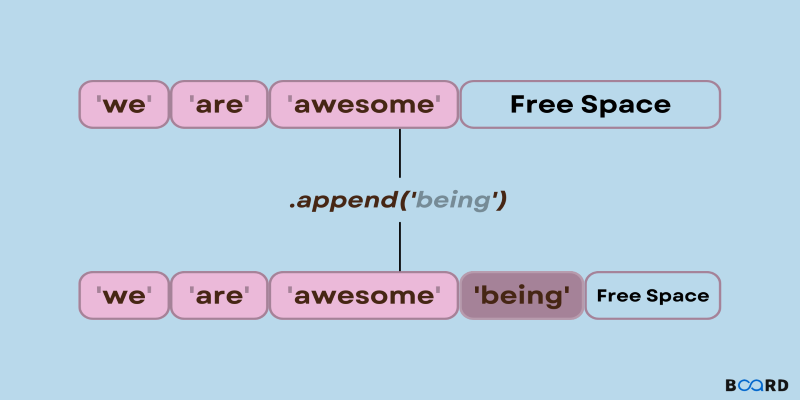

Need to add items to a Python list? Learn how append() works, what it does under the hood, and when to use it with confidence

Explore how AI innovates the business world and what the future of AI Transformation holds for the modern business world

How Netflix Case Study (EDA) reveals the data-driven strategies behind its streaming success, showing how viewer behavior and preferences shape content and user experience

Discover how Cerebras’ AI supercomputer outperforms rivals with wafer-scale design, low power use, and easy model deployment

Want to save time processing forms? Discover how Azure Form Recognizer extracts structured data from documents with speed, accuracy, and minimal setup

How depth2img pre-trained models improve image-to-image generation by using depth maps to preserve structure and realism in visual transformations

Lensa AI’s viral portraits raise concerns over user privacy, data consent, digital identity, representation, and ethical AI usage

How Google Bard’s latest advancements significantly improve its logic and reasoning abilities, making it smarter and more effective in handling complex conversations and tasks

Apple’s AI-powered RoomPlan uses LiDAR and AI to create accurate 3D room models that integrate seamlessly with top design apps

Celonis faces rising competition by evolving process mining with real-time insights, integration, and user-friendly automation