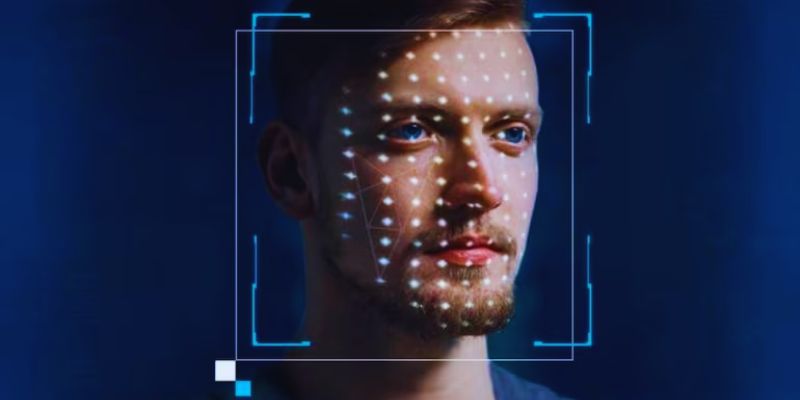

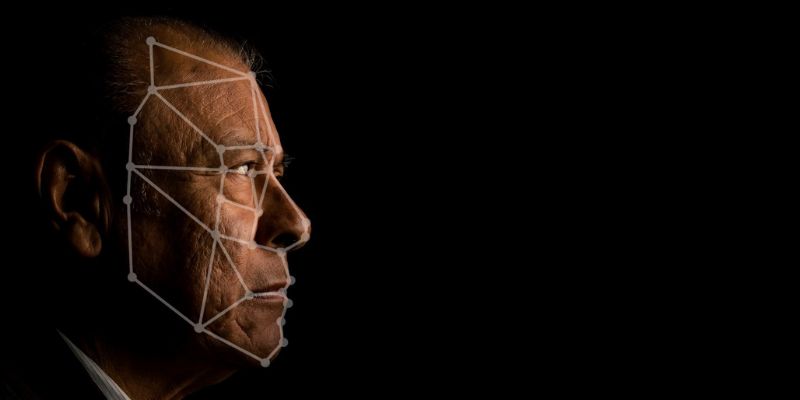

Intel recently unveiled a deepfake detection tool to identify manipulated images and videos. Intel’s deepfake detection systems use advanced neural networks and digital watermarking techniques to identify synthetic content. The tool aims to protect individuals from reputational harm and identity theft. Experts raise ethical concerns about data collection, storage practices, and privacy implications of artificial intelligence. There are concerns about potential misuse by governments and corporations for surveillance.

Although the detector demonstrates promising accuracy, it raises concerns about potential algorithmic bias. Companies and regulators must review guidelines governing these technologies. Intel’s initiatives have sparked discussions on deepfake threats and mitigation strategies. Sustainable innovation requires awareness of the risks associated with deepfake detection technologies. Transparency and accountability remain central concerns among stakeholders. The development of responsible AI practices will play a crucial role in shaping public trust.

Intel developed a deepfake detector that leverages convolutional neural networks and digital watermark analysis techniques. The model was trained on millions of real and manipulated media samples. It analyzes pixel patterns and noise artifacts to identify synthetic content. Intel’s deepfake detection technology runs efficiently on dedicated hardware accelerators. Initial tests show low false positive rates and high detection accuracy. The system supports real-time video analysis at up to 12 frames per second.
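
The accuracy, false positive rate, and frame-rate figures above can be made concrete with a small calculation. The following is a minimal sketch, not Intel's evaluation code: the names `DetectionMetrics`, `evaluate`, and `frame_budget_ms` are hypothetical, and the sketch assumes each test sample carries a ground-truth label and a yes/no verdict from the detector.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectionMetrics:
    accuracy: float
    false_positive_rate: float


def evaluate(labels: list[bool], predictions: list[bool]) -> DetectionMetrics:
    """Score a detector on labeled samples.

    labels[i] is True when sample i really is a deepfake;
    predictions[i] is True when the detector flagged it as one.
    """
    if len(labels) != len(predictions):
        raise ValueError("labels and predictions must have the same length")
    if not labels:
        raise ValueError("need at least one sample")

    correct = 0
    false_positives = 0
    real_total = 0
    for is_fake, flagged in zip(labels, predictions):
        if is_fake == flagged:
            correct += 1
        if not is_fake:
            real_total += 1
            if flagged:
                # Authentic media wrongly flagged as synthetic.
                false_positives += 1

    fpr = false_positives / real_total if real_total else 0.0
    return DetectionMetrics(accuracy=correct / len(labels),
                            false_positive_rate=fpr)


def frame_budget_ms(fps: float) -> float:
    """Time available to analyze one frame at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps
```

At the 12 frames per second quoted above, `frame_budget_ms(12)` works out to roughly 83 ms per frame, which is the budget any per-frame analysis would have to fit within.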

The Intel team focused on improving model explainability and optimizing performance. To enhance algorithm transparency, researchers integrated user feedback mechanisms. For each analysis, the detector logs metadata and includes confidence scores. Intel plans to release a developer toolkit for external integration soon. With continuous training updates, the model adapts to emerging deepfake techniques. To secure user privacy, Intel combines hardware and software protections. The detector’s design balances rigorous accuracy standards with real-time processing requirements.
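
The article does not describe the format of the metadata and confidence scores logged for each analysis. As an illustration only, a log entry might look like the sketch below; the field names, the 0.5 threshold, and the `make_record` and `to_log_line` helpers are assumptions, not part of any published Intel API.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AnalysisRecord:
    media_id: str
    confidence: float    # estimated probability the media is synthetic, 0..1
    verdict: str         # "synthetic" or "authentic"
    model_version: str
    analyzed_at: str     # ISO 8601 timestamp in UTC


def make_record(media_id: str, confidence: float, model_version: str,
                threshold: float = 0.5,
                now: Optional[datetime] = None) -> AnalysisRecord:
    """Build one log record; the threshold is a hypothetical default."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    verdict = "synthetic" if confidence >= threshold else "authentic"
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    return AnalysisRecord(media_id, confidence, verdict,
                          model_version, timestamp)


def to_log_line(record: AnalysisRecord) -> str:
    """Serialize a record as one JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)
```

Recording the model version alongside the score matters when the model is retrained continuously, because a logged verdict can then be traced back to the model that produced it.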

Deepfake detection raises ethical concerns related to automated content moderation systems. Algorithmic bias may cause detectors to flag certain demographic groups disproportionately. Intel’s deepfake detection systems may reflect biases present in their training datasets. Ethical AI and privacy principles call for transparency in reporting detection errors. Stakeholders disagree about who is accountable for false positives and content removal. Platforms could use detection tools to stifle legitimate criticism or expression. Researchers advocate for independent evaluations of data sources and detection techniques.
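
One way an independent evaluation could check for the bias described above is to compare false positive rates across demographic groups. The sketch below assumes an audit dataset in which every sample has a group label, a ground-truth label, and the detector's verdict; the function names and the tuple layout are hypothetical.

```python
from collections import defaultdict
from typing import Iterable


def false_positive_rate_by_group(
    samples: Iterable[tuple[str, bool, bool]],
) -> dict[str, float]:
    """Compute the false positive rate for each group.

    Each sample is (group, is_fake, flagged). Only authentic media
    (is_fake == False) can produce a false positive, so groups with
    no authentic samples are left out of the result.
    """
    authentic_count: dict[str, int] = defaultdict(int)
    wrongly_flagged: dict[str, int] = defaultdict(int)
    for group, is_fake, flagged in samples:
        if is_fake:
            continue
        authentic_count[group] += 1
        if flagged:
            wrongly_flagged[group] += 1
    return {group: wrongly_flagged[group] / total
            for group, total in authentic_count.items()}


def max_fpr_gap(rates: dict[str, float]) -> float:
    """Largest difference in false positive rate between any two groups."""
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())
```

A large gap means authentic media from one group is wrongly flagged more often than media from another. What size of gap is acceptable is a policy question that the code cannot settle.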

Open-source projects should promote accountability and foster diverse contributions. Ethical guidelines must address data handling and algorithmic decision-making. To explore ethical implications, Intel partnered with academic institutions. Ongoing dialogue between developers and civil society will help produce more ethical outcomes. Regulators should update legal frameworks to balance safety with expression rights. The debate highlights the need for multidisciplinary ethics review committees. Ethics research must incorporate diverse cultural and social perspectives from around the world.

Intel’s deepfake detection system analyzes user media to assess authenticity. The process may involve uploading videos or images to external servers for analysis. Users fear unauthorized storage or secondary use of their data. Privacy advocates question the retention rules for analyzed content and metadata. Policies on how long detection logs are stored must be clear. Intel says it anonymizes data and removes samples following analysis. Independent verification of deletion practices would build greater trust.
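
The article does not say how Intel anonymizes data or enforces retention. As a rough sketch of what such controls could look like, the example below replaces user identifiers with a keyed hash and drops log entries older than a fixed retention window. The function names, the log entry layout, and the use of HMAC-SHA256 are illustrative assumptions. A keyed hash is pseudonymization rather than full anonymization, since anyone holding the key can recompute the mapping.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace a user identifier with a keyed SHA-256 digest (hex)."""
    return hmac.new(secret_key, user_id.encode("utf-8"),
                    hashlib.sha256).hexdigest()


def purge_expired(logs: list[dict], retention: timedelta,
                  now: datetime) -> list[dict]:
    """Keep only entries whose 'analyzed_at' falls within the window.

    Each entry is assumed to hold a timezone-aware datetime under
    the hypothetical key 'analyzed_at'.
    """
    cutoff = now - retention
    return [entry for entry in logs if entry["analyzed_at"] >= cutoff]
```

Stating the retention window as an explicit value, such as `timedelta(days=30)`, makes the storage policy something an outside auditor can read and test.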

Integrating this technology into social media platforms raises concerns about cross-border data transfer. Diverse regional privacy regulations complicate global deployment. Companies must comply with GDPR, CCPA, and other data protection rules. Transparency reports can detail how privacy protections are applied. Users should be able to opt in or out of analysis. Clear consent mechanisms will respect individual privacy preferences. Strong encryption and secure pipelines reduce the risk of unauthorized access. Working with privacy professionals improves overall data governance.

Potential for Misuse and Regulatory Gaps

Deepfake detection tools could be repurposed for mass surveillance or targeted attacks on dissenters. Authoritarian regimes might use these tools to identify and suppress dissidents. Companies could track workers or consumers without express permission. Intel’s detector highlights the dangers of unchecked use in sensitive contexts. Regulatory gaps leave many applications of detection technology without oversight. Policymakers must close the loopholes that permit harmful uses. Industry self-regulation alone may not stop abuse by bad actors.

Clearly defined licensing regulations could restrict applications to approved use cases. Oversight agencies should conduct regular evaluations of high-risk projects. Public-private cooperation can help enforce ethical and legal compliance standards. Awareness campaigns could inform consumers of their rights under detection rules. Harmonizing policies between countries depends on international cooperation. Future laws must cover both operators of detection tools and creators of deepfakes.

Balancing Innovation with Ethical Safeguards

Media trust benefits significantly from advancements in deepfake detection. However, ethical standards must guide both the development and application of these technologies. Privacy-by-design principles should be integrated into Intel’s deepfake detection system. Developers can include fairness constraints in model training procedures. Regular ethical impact assessments would surface potential risks early. Transparency portals could publish detection performance statistics openly. Working with ethics consultants and community partners will improve tool design. Open communication helps align technical progress with societal values.

Funding for independent research can promote objective assessment. Intel and its partners may fund external validation programs. Good governance calls for clear accountability in cases of misuse. Training courses should teach users responsible ways to use the tools. Ethics education helps AI engineers recognize such risks. Businesses should set codes of conduct for developers of detection technology.

Intel’s detector signals a shift toward safer digital media ecosystems. Its deepfake detection system could help reduce misinformation and fraud. Ethical AI principles and privacy considerations must guide the system’s future development. Policymakers must establish clear guidelines to prevent the misuse of surveillance technologies. Researchers emphasize that algorithms should be transparent and fair. Greater public awareness of deepfake detection tools will foster trust. Collaboration between governments and technology companies will balance protection and innovation. This discourse highlights the importance of ethical AI and the need for responsible safeguards. The responsible and effective use of detection technologies requires ongoing oversight.

Advertisement

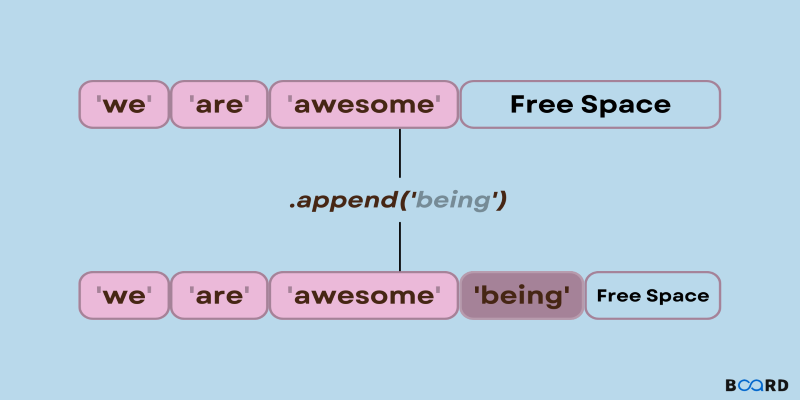

Need to add items to a Python list? Learn how append() works, what it does under the hood, and when to use it with confidence

Explore how AI innovates the business world and what the future of AI Transformation holds for the modern business world

Compare Microsoft, Copilot Studio, and custom AI to find the best solution for your business needs.

Discover how Dremio uses generative AI tools to simplify complex data queries and deliver faster, smarter data insights.

How depth2img pre-trained models improve image-to-image generation by using depth maps to preserve structure and realism in visual transformations

Explore key Alibaba Cloud challenges and understand why this AI cloud vendor faces hurdles in global growth and enterprise adoption.

Discover how Cerebras’ AI supercomputer outperforms rivals with wafer-scale design, low power use, and easy model deployment

How Netflix Case Study (EDA) reveals the data-driven strategies behind its streaming success, showing how viewer behavior and preferences shape content and user experience

Automation Anywhere uses AI to enhance process discovery, enabling faster insights, lower costs, and scalable transformation

Explore how AI agents are transforming the digital workforce in 2025. Discover roles, benefits, challenges, and future trends

Want to save time processing forms? Discover how Azure Form Recognizer extracts structured data from documents with speed, accuracy, and minimal setup

Google Cloud’s new AI tools enhance productivity, automate processes, and empower all business users across various industries