Cerebras’ artificial intelligence supercomputer transforms how deep learning tools are used in research. It offers several advantages that address emerging performance challenges. Cerebras is developing faster AI technology that is capable of efficiently processing massive datasets. Traditional servers often struggle to meet the growing demands for speed and computational power in AI workloads. By leveraging a wafer-scale engine and system-wide optimizations, Cerebras takes a fundamentally different approach. Its architecture improves training for complex models while significantly reducing latency.

Cerebras focuses on solving real-world challenges, such as language modeling and medical research, delivering rapid results across diverse fields. Few devices effectively combine ease of use, energy efficiency, and high performance. Today, many in the AI community regard it as a new gold standard. Its advanced architecture and robust software distinguish it from conventional high-performance computing systems.

With its wafer-scale engine, Cerebras leads the AI hardware market. The engine replaces many small chips with a single, large silicon wafer. This architecture increases bandwidth and reduces latency. Most AI systems rely on multiple interconnected GPUs, often resulting in power inefficiencies and communication delays. Cerebras solves these problems with a single wafer containing over 850,000 processing cores on one die. This design allows the system to run extremely large models seamlessly. AI developers can now build neural networks that exceed the limits of traditional GPUs. The architecture eliminates common performance bottlenecks, saving valuable time.
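To make the communication cost of multi-GPU training concrete, here is a rough sketch that assumes gradients are synchronized with a ring all-reduce, one common scheme (the article does not say which scheme any particular cluster uses). The function names, the link bandwidth, and the model size are hypothetical values chosen for illustration, and the estimate considers bandwidth only, ignoring latency and overlap with compute.

```python
def ring_allreduce_bytes_per_device(payload_bytes: int, num_devices: int) -> float:
    """Bytes each device sends in one ring all-reduce of `payload_bytes`.

    A ring all-reduce moves 2 * (N - 1) / N times the payload per device.
    With a single device there is nothing to synchronize.
    """
    if num_devices < 1:
        raise ValueError("num_devices must be at least 1")
    if num_devices == 1:
        return 0.0
    return 2 * (num_devices - 1) / num_devices * payload_bytes


def sync_time_seconds(payload_bytes: int, num_devices: int,
                      link_bytes_per_second: float) -> float:
    """Bandwidth-only estimate of one gradient synchronization."""
    sent = ring_allreduce_bytes_per_device(payload_bytes, num_devices)
    return sent / link_bytes_per_second


if __name__ == "__main__":
    # Assumed values: 1 billion parameters with 4-byte gradients,
    # 8 devices, and a 100 GB/s link between neighbours.
    gradient_bytes = 1_000_000_000 * 4
    print(ring_allreduce_bytes_per_device(gradient_bytes, 8))  # 7000000000.0
    print(sync_time_seconds(gradient_bytes, 8, 100e9))
    print(ring_allreduce_bytes_per_device(gradient_bytes, 1))  # 0.0
```

Under these assumptions, every training step pays for several gigabytes of traffic per device, a cost that disappears when the whole model sits on one device.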

Data moves within the system without bouncing across external networks, ensuring efficient throughput. Fewer components mean fewer points of failure. The hardware remains cooler and operates with greater energy efficiency. As a result, AI teams can complete complex tasks faster using fewer machines. The wafer-scale design also greatly simplifies scaling as models grow in size. Cerebras preserves hardware simplicity even as workloads become more demanding. The wafer-scale engine is the defining feature of Cerebras’ AI supercomputer.

Cerebras uses memory in an innovative way that is tailored to large AI models. Traditional systems distribute memory across multiple servers and GPUs, often leading to inefficiencies. Cerebras locates memory physically closer to the compute cores, enabling faster data access and reducing latency. Additionally, memory is shared through a unified pool, eliminating the need for complex data transfers and data copying between components. AI researchers value this capability for workloads such as GPT or BERT models. They can train large networks without changing their code.
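The pressure that large models put on distributed memory can be estimated with simple arithmetic. The sketch below is an illustration, not a description of any vendor's sizing tool: the function names are hypothetical, the 16 bytes per parameter is a commonly quoted rule of thumb for mixed-precision training with the Adam optimizer (weights, gradients, and optimizer state, excluding activations), and the per-device memory is an assumed figure.

```python
def training_state_bytes(num_parameters: int, bytes_per_parameter: int = 16) -> int:
    """Approximate memory for weights, gradients, and optimizer state.

    The default of 16 bytes per parameter is an assumption, not a
    measured value; activations are not included.
    """
    return num_parameters * bytes_per_parameter


def devices_needed(num_parameters: int, device_memory_bytes: int,
                   bytes_per_parameter: int = 16) -> int:
    """Minimum number of devices whose combined memory holds the state."""
    total = training_state_bytes(num_parameters, bytes_per_parameter)
    # Ceiling division using integers only.
    return -(-total // device_memory_bytes)


if __name__ == "__main__":
    # Assumed values: a 1.5-billion-parameter model and 16 GB per device.
    params = 1_500_000_000
    print(training_state_bytes(params))            # 24000000000
    print(devices_needed(params, 16_000_000_000))  # 2
```

Once the answer is greater than one, the model state has to be split across devices and kept in sync, which is the complexity a unified memory pool is meant to avoid.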

Cerebras simplifies system programming for users. Its software layer automatically manages memory allocation, reducing the need for manual configuration. Engineers can focus on research rather than machine tuning. With this arrangement, deep learning tasks are simplified and accelerated. Performance remains consistent even as tasks grow more complex. Memory capacity does not restrict model size, a significant advantage over GPU-based systems. It is another element that sets Cerebras apart.

Supercomputing faces significant challenges related to power consumption. Traditional systems require large amounts of power to manage data flow and cooling. Cerebras tackles this with a low-energy architecture in which a single wafer-scale chip takes the place of many linked chips. That means fewer wires, fans, and network switches. Less energy is spent moving data between components because all the work is done on one chip. Even at the highest training loads, power consumption remains comparatively low.

Cerebras delivers high performance without incurring significant energy costs. It also supports sustainability goals aligned with green computing initiatives. Many hospitals and research labs must keep emissions low, and Cerebras enables them to do so while still running large artificial intelligence projects. The chip's design simplifies ventilation and temperature control, reducing the need for additional cooling arrangements. Cerebras delivers more performance per watt than several GPU clusters. That makes it well suited to long-term artificial intelligence installations. Efficiency is a large part of what sets Cerebras’ AI system apart from its competitors.
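Performance per watt and total energy for a training run are straightforward to compute once throughput and power draw are known. The sketch below shows the arithmetic only. The function names and every number in it are hypothetical placeholders, not measurements of Cerebras or of any GPU cluster.

```python
def performance_per_watt(throughput: float, power_watts: float) -> float:
    """Throughput (for example, samples per second) divided by power draw."""
    if power_watts <= 0:
        raise ValueError("power_watts must be positive")
    return throughput / power_watts


def energy_kwh(power_watts: float, hours: float) -> float:
    """Energy consumed at a constant power draw, in kilowatt-hours."""
    return power_watts / 1000 * hours


if __name__ == "__main__":
    # Placeholder figures for two systems running the same job.
    system_a = {"throughput": 1000.0, "power_watts": 500.0}
    system_b = {"throughput": 1200.0, "power_watts": 1200.0}

    for name, spec in (("A", system_a), ("B", system_b)):
        print(name, performance_per_watt(spec["throughput"], spec["power_watts"]))

    # A 20 kW system running for 24 hours.
    print(energy_kwh(20_000, 24))  # 480.0
```

Comparing systems this way only makes sense when both run the same model to the same accuracy, since raw throughput figures are not otherwise comparable.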

Cerebras’ software tools also stand out for their usability and performance. Programming and maintaining AI systems is often complex and time-consuming, but Cerebras simplifies these tasks with its CS Software toolset. It enables users to migrate their models from the TensorFlow and PyTorch frameworks, with no need to learn new coding structures. The software fits into existing workflows, so models can be deployed in fewer steps.

It also enables faster and simpler debugging. The CS Software manages low-level operations such as load balancing and memory mapping, which reduces human error and saves valuable development time. It allows users to optimize performance without needing deep hardware expertise, so researchers do not have to worry about technical tuning. The tools support a wide range of artificial intelligence applications. For many teams, CS Software is a plug-and-play solution and one of the more approachable systems available. Ease of deployment is one clear reason Cerebras leads the field.

Cerebras systems are actively used in real-world projects, not just in theoretical research. In the medical field, researchers use them to develop disease models and to accelerate the analysis of cancer and genetics. Faster results enable doctors to treat their patients better. In national labs, the system handles large simulations covering physics, climate change, and weapons safety. It also helps forecast financial trends to guide decisions. Companies can run models more quickly and make better judgments.

Natural language processing relies on training language models with billions of parameters. Cerebras supports this work, advancing applications such as sentiment analysis, text summarization, and machine translation. Cerebras also supports researchers in space science and astrophysics. NASA and several other organizations use it to process mission data. It handles a variety of tasks without any code modifications. Industry leaders choose Cerebras for its simplicity, speed, and scope. Every day, it proves itself in mission-critical roles. Its practical value across many fields demonstrates the system's performance. Real-world outcomes, not just technical specifications, make it a strong choice.

Cerebras’ AI supercomputer stands out in chip architecture, model training, and software performance. It combines fast memory access, wafer-scale architecture, and energy-efficient design. Its user-friendly software tools help researchers accelerate deployment. The system has proven its value through consistent performance across multiple sectors. Enhanced cloud access and future upgrades will further expand its capabilities. Cerebras is shaping the future of deep learning by efficiently addressing real-world challenges. Its bold strategy continues to raise the bar in the highly competitive AI market.

Advertisement

Discover how resume companies are using non-biased generative AI to ensure fair, inclusive, and accurate hiring decisions.

Discover 7 effective ways to accelerate AI software development and enhance speed, scalability, and innovation in 2025.

Explore how AI innovates the business world and what the future of AI Transformation holds for the modern business world

Are you overestimating your Responsible AI maturity? Discover key aspects of AI governance, ethics, and accountability for sustainable success

Discover how Cerebras’ AI supercomputer outperforms rivals with wafer-scale design, low power use, and easy model deployment

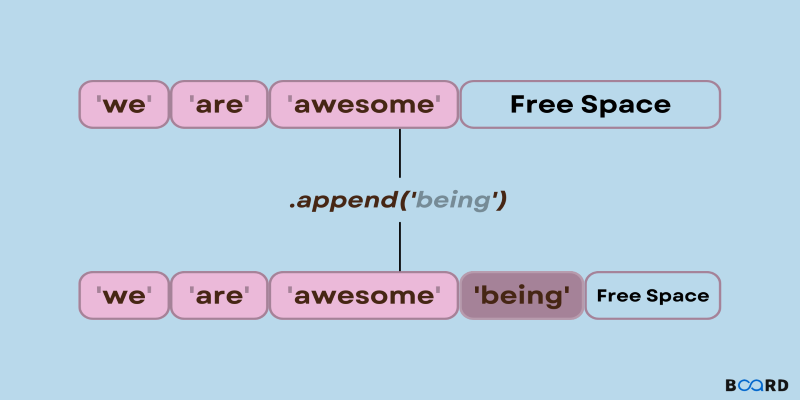

Need to add items to a Python list? Learn how append() works, what it does under the hood, and when to use it with confidence

How depth2img pre-trained models improve image-to-image generation by using depth maps to preserve structure and realism in visual transformations

Intel’s deepfake detector promises accuracy but sparks ethical debates around privacy, data usage, and surveillance risks

Master GPT-4.1 prompting with this detailed guide. Learn techniques, tips, and FAQs to improve your AI prompts

Salesforce advances secure, private generative AI to boost enterprise productivity and data protection

Automation Anywhere uses AI to enhance process discovery, enabling faster insights, lower costs, and scalable transformation

How Netflix Case Study (EDA) reveals the data-driven strategies behind its streaming success, showing how viewer behavior and preferences shape content and user experience